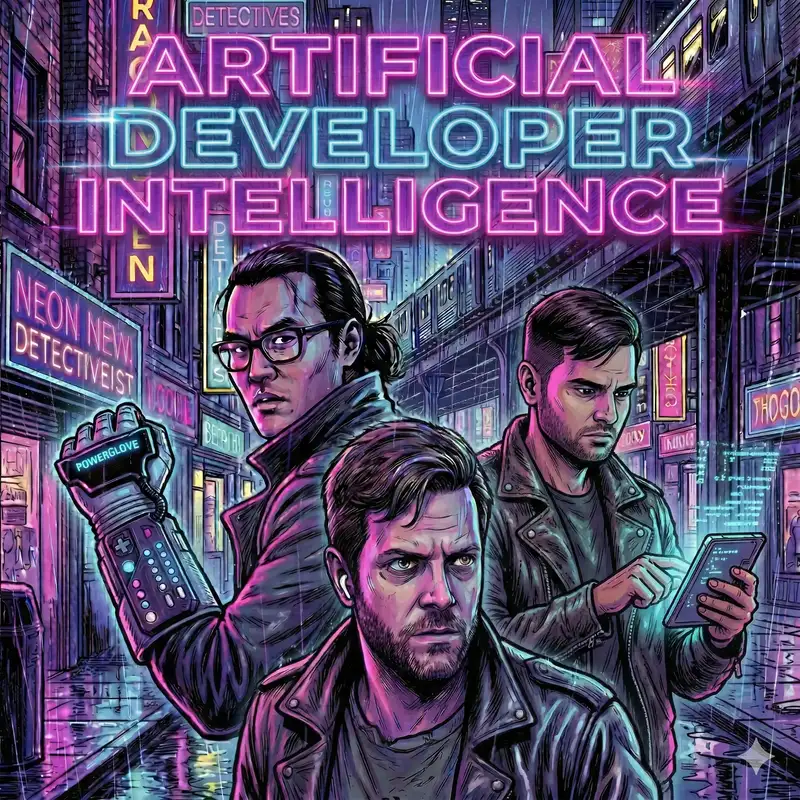

Episode 9: Chinese Models 7 Months Behind US Labs, Token Efficient Languages, and LLM Problems Observed in Humans

In this episode of the podcast "Artificial Developer Intelligence," host Shimin Zhang and co-host Dan Lasky discuss the evolving landscape of AI in programming, recent news, innovative tools, and the implications of AI for various sectors. They explore the partnership between Apple and Google, the concept of "doom coding," and how humans make LLM-like mistakes. The conversation also covers the token efficiency of programming languages, includes a deep dive into dynamic large concept models, and examines societal perceptions of AI, culminating in a discussion of a potential AI bubble.

Takeaways

- Apple's partnership with Google marks a significant shift in AI development.

- Doom coding encourages productive use of time instead of doom scrolling.

- Public perception of AI is heavily influenced by marketing hype.

- Programming languages vary in token efficiency, which affects how much of a model's context window and token budget equivalent code consumes.

- Dynamic large concept models offer a new approach to language processing.

Resources Mentioned

Google’s Gemini to power Apple’s AI features like Siri

Chinese AI models have lagged the US frontier by 7 months on average since 2023

doom-coding

TimeCapsuleLLM

Emergent Behavior: When Skills Combine

LLM problems observed in humans

Which programming languages are most token-efficient?

Dynamic Large Concept Models: Latent Reasoning in an Adaptive Semantic Space

‘We’ve Done Our Country a Great Disservice’ by Offshoring: Nvidia’s Jensen Huang Says ‘We Have to Create Prosperity’ for All, Not Just PhDs

Computer scientist Yann LeCun: “Intelligence really is about learning”

Are we in an AI bubble? What 40 tech leaders and analysts are saying, in one chart

Connect with ADIPod

- Email us at humans@adipod.ai if you have any feedback or requests, or just want to say hello!

- Check out our website: www.adipod.ai